April 2020

There's an issue here: in the tutorial's reference 4, the coefficient in front of the mean squared error loss formula should be 1/5, not 1/2.
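For comparison, here is a NumPy sketch of the two conventions, using illustrative values rather than the tutorial's data. The mean squared error averages the squared residuals over the n samples, so with five samples the coefficient is 1/n = 1/5. A factor of 1/2 in front of the sum is a separate convention: it cancels the 2 that appears when you differentiate the square. The function names `mse` and `half_sse` are hypothetical.

```python
import numpy as np

def mse(y_pred, y):
    """Mean squared error: (1/n) * sum of squared residuals."""
    residual = np.asarray(y_pred, dtype=np.float64) - np.asarray(y, dtype=np.float64)
    return np.mean(residual ** 2)

def half_sse(y_pred, y):
    """Half the sum of squared errors: (1/2) * sum; its gradient has no factor 2."""
    residual = np.asarray(y_pred, dtype=np.float64) - np.asarray(y, dtype=np.float64)
    return 0.5 * np.sum(residual ** 2)

# Five illustrative samples (n = 5), so the MSE coefficient is 1/5.
y = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y_pred = np.array([1.5, 2.0, 2.0, 4.0, 6.0])
print(mse(y_pred, y))       # (0.25 + 0 + 1 + 0 + 1) / 5
print(half_sse(y_pred, y))  # (0.25 + 0 + 1 + 0 + 1) / 2
```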


April 2020

In the automatic differentiation section, why aren't the outputs of y and y_grad tensors?


April 2020

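optimizer = tf.keras.optimizers.SGD(learning_rate=5e-4)

In the TensorFlow example program, this learning_rate does not match the text further down: "Here, we computed the partial derivatives of the loss function with respect to the parameters in the same way as before. We also used tf.keras.optimizers.SGD(learning_rate=1e-3) to declare a gradient descent optimizer (Optimizer) with a learning rate of 1e-3."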


April 2020

▶ snowkylin

Thanks! I thought my fundamentals weren't solid, but it looks like I can be a little more confident. Thanks a lot!

April 2020

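Could you explain how to set up a PyCharm environment? I got the Professional edition, but I'm not sure how to configure the environment. I've always used Colab before.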


April 2020

▶ David_Lee

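Setting up PyCharm is fairly beginner-friendly, and there are plenty of tutorials online. I've also written some notes in the "IDE Configuration" section. If you still run into problems, feel free to ask about the specific issue.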


April 2020

▶ snowkylin

It's configured now, but every time I run something, the following error output appears. What's causing it?

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:516: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint8 = np.dtype([("qint8", np.int8, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:517: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_quint8 = np.dtype([("quint8", np.uint8, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:518: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint16 = np.dtype([("qint16", np.int16, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:519: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_quint16 = np.dtype([("quint16", np.uint16, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:520: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint32 = np.dtype([("qint32", np.int32, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:525: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
np_resource = np.dtype([("resource", np.ubyte, 1)])
/home/fish/.local/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:541: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint8 = np.dtype([("qint8", np.int8, 1)])
/home/fish/.local/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:542: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_quint8 = np.dtype([("quint8", np.uint8, 1)])
/home/fish/.local/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:543: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint16 = np.dtype([("qint16", np.int16, 1)])
/home/fish/.local/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:544: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_quint16 = np.dtype([("quint16", np.uint16, 1)])
/home/fish/.local/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:545: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint32 = np.dtype([("qint32", np.int32, 1)])
/home/fish/.local/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:550: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
np_resource = np.dtype([("resource", np.ubyte, 1)])
2020-04-25 11:25:56.201023: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Could not dlopen library 'libcuda.so.1'; dlerror: libcuda.so.1: cannot open shared object file: No such file or directory

2020-04-25 11:25:56.201044: E tensorflow/stream_executor/cuda/cuda_driver.cc:318] failed call to cuInit: UNKNOWN ERROR (303)

2020-04-25 11:25:56.201061: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (fish-desktop): /proc/driver/nvidia/version does not exist

2020-04-25 11:25:56.201256: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2020-04-25 11:25:56.235142: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 3591575000 Hz

2020-04-25 11:25:56.235842: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x5ba5910 executing computations on platform Host. Devices:

2020-04-25 11:25:56.235864: I tensorflow/compiler/xla/service/service.cc:175] StreamExecutor device


April 2020

Hi, could you tell me how to fix this problem I ran into?

InvalidArgumentError Traceback (most recent call last)
<ipython-input-24-dfbd31710005> in <module>
     11 for e in range(num_epoch):
     12     with tf.GradientTape() as tape:
---> 13         y_pred = a * X + b
     14         loss = tf.reduce_sum(tf.square(y - y_pred))
     15     grads = tape.gradient(loss, variables)
InvalidArgumentError: cannot compute Mul as input #1 (zero-based) was expected to be a float tensor but is a double tensor [Op:Mul] name: mul/

It looks like a data type problem. How should I fix it?


April 2020

import tensorflow as tf
X = tf.constant([[1., 2.], [3., 4.]])
y = tf.constant([[1.], [2.]])
w = tf.Variable(initial_value=[[1.], [2.]])
b = tf.Variable(initial_value=1.)
with tf.GradientTape() as tape:
    L = tf.reduce_sum(tf.square(tf.matmul(X, w) + b - y))
w_grad, b_grad = tape.gradient(L, [w, b])  # compute the partial derivatives of L(w, b) with respect to w and b
print(L, w_grad, b_grad)

Running the code above gives the following error. Could you advise how to fix it? Thanks.

2020-04-26 16:31:11.896090: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2
Traceback (most recent call last):
  File "E:/[RemoteSync]/Shared/PycharmProjects/test/src/03.partial_derivative.py", line 8, in <module>
    L = tf.reduce_sum(tf.square(tf.matmul(X, w) + b - y))
  File "C:\Users\demo\AppData\Local\conda\conda\envs\tf2\lib\site-packages\tensorflow_core\python\util\dispatch.py", line 180, in wrapper
    return target(*args, **kwargs)
  File "C:\Users\demo\AppData\Local\conda\conda\envs\tf2\lib\site-packages\tensorflow_core\python\ops\math_ops.py", line 1595, in reduce_sum
    _ReductionDims(input_tensor, axis))
  File "C:\Users\demo\AppData\Local\conda\conda\envs\tf2\lib\site-packages\tensorflow_core\python\ops\math_ops.py", line 1473, in _ReductionDims
    return constant_op.constant(np.arange(rank, dtype=np.int32))
  File "C:\Users\demo\AppData\Local\conda\conda\envs\tf2\lib\site-packages\tensorflow_core\python\framework\constant_op.py", line 258, in constant
    allow_broadcast=True)
  File "C:\Users\demo\AppData\Local\conda\conda\envs\tf2\lib\site-packages\tensorflow_core\python\framework\constant_op.py", line 266, in _constant_impl
    t = convert_to_eager_tensor(value, ctx, dtype)
  File "C:\Users\demo\AppData\Local\conda\conda\envs\tf2\lib\site-packages\tensorflow_core\python\framework\constant_op.py", line 96, in convert_to_eager_tensor
    return ops.EagerTensor(value, ctx.device_name, dtype)
ValueError: Attempt to convert a value (0) with an unsupported type (<class 'numpy.int32'>) to a Tensor.

Process finished with exit code 1


April 2020

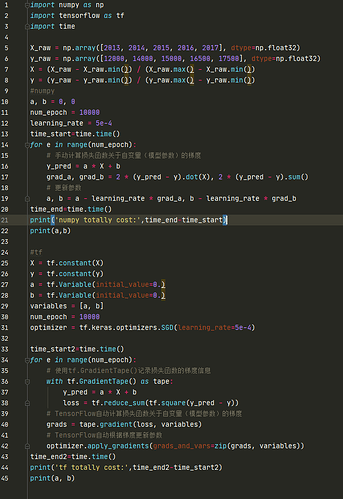

▶ laili731236225

Please check the data type of your X variable. If it is float64, convert it to float32.

X_raw = np.array([2013, 2014, 2015, 2016, 2017], dtype=np.float32)
y_raw = np.array([12000, 14000, 15000, 16500, 17500], dtype=np.float32)
X = (X_raw - X_raw.min()) / (X_raw.max() - X_raw.min())
y = (y_raw - y_raw.min()) / (y_raw.max() - y_raw.min())

Note the dtype in the code.
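Here is why the `dtype` matters. The error says `Mul` expected a float (float32) tensor but received a double (float64). `tf.Variable(initial_value=0.)` is float32 by default, while NumPy produces float64 by default, so `tf.constant(X)` built from an unconverted array is float64. The NumPy-only sketch below shows the dtypes involved:

```python
import numpy as np

# Without dtype, NumPy infers an integer dtype here, and the true division
# in the min-max normalization then produces a float64 array.
X_default = np.array([2013, 2014, 2015, 2016, 2017])
X_default_norm = (X_default - X_default.min()) / (X_default.max() - X_default.min())
print(X_default_norm.dtype)  # float64

# With dtype=np.float32, the normalized result stays float32,
# which matches the float32 default of tf.Variable(initial_value=0.).
X_raw = np.array([2013, 2014, 2015, 2016, 2017], dtype=np.float32)
X = (X_raw - X_raw.min()) / (X_raw.max() - X_raw.min())
print(X.dtype)  # float32

# You can also convert an existing float64 array explicitly.
X_fixed = X_default_norm.astype(np.float32)
print(X_fixed.dtype)  # float32
```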


April 2020

▶ zknyy

Please check whether your TensorFlow version is the 2.1 stable release:

import tensorflow as tf
print(tf.__version__)

April 2020

▶ snowkylin

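Thanks for the reply! The problem above was indeed a data type issue, and it's solved now. But I ran into another problem on the next statement: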

<ipython-input-9-4a00192d6d84> in <module>
      8 y = tf.constant(y)
      9
---> 10 a = tf.Variable(initial_value=0.)
     11 b = tf.Variable(initial_value=0.)
     12 variables = [a, b]
InvalidArgumentError: assertion failed: [0] [Op:Assert] name: EagerVariableNameReuse

Now there's another problem here. Could you help me with it as well?


April 2020

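I'd suggest creating a new .py file in your IDE, writing the code you want to run there, and running the file directly. Without context, it's hard to tell what caused an error in an IPython environment.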

April 2020

▶ David_Lee

None of these are real errors. They are mostly informational messages.
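If these messages are just noise to you, one commonly used option is the `TF_CPP_MIN_LOG_LEVEL` environment variable, which filters TensorFlow's C++ log output. This is an addition here, not something the thread proposes. The variable must be set before `tensorflow` is imported. It does not silence the Python-level NumPy `FutureWarning`s shown above. A minimal sketch:

```python
import os

# Filter TensorFlow's C++ logging: "0" shows everything, "1" hides INFO,
# "2" hides INFO and WARNING, and "3" also hides ERROR.
# It only takes effect if set before TensorFlow is imported.
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

# import tensorflow as tf  # import only after setting the variable
```

With level "2", lines tagged `I` (such as the `dso_loader` and `cpu_feature_guard` messages) are hidden. The `E` line from `cuda_driver.cc` would still be shown.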

April 2020

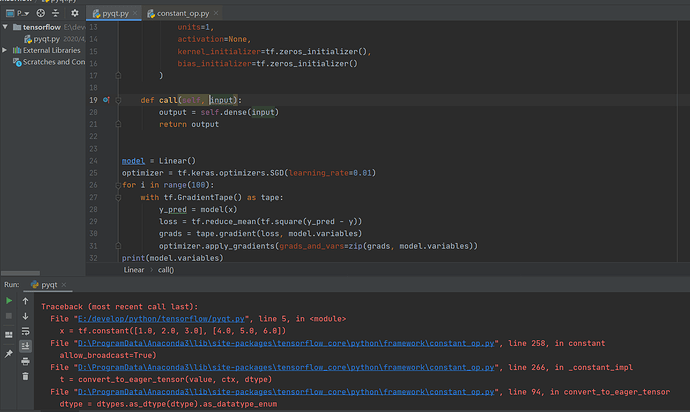

I get an error when the call function is invoked. Could anyone help explain it? Thanks!


April 2020

▶ 话_陈

Please provide the complete program code and the full error message. The screenshot doesn't show the whole traceback.


April 2020

▶ snowkylin

The code from "TensorFlow Model Building and Training":

import tensorflow as tf
x = tf.constant([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
y = tf.constant([10.0], [20.0])
class Linear(tf.keras.Model):
    def __init__(self):
        super().__init__()
        self.dense = tf.keras.layers.Dense(
            units=1,
            activation=None,
            kernel_initializer=tf.zeros_initializer(),
            bias_initializer=tf.zeros_initializer()
        )
    def call(self, input):
        output = self.dense(input)
        return output
model = Linear()
optimizer = tf.keras.optimizers.SGD(learning_rate=0.01)
for i in range(100):
    with tf.GradientTape() as tape:
        y_pred = model(x)
        loss = tf.reduce_mean(tf.square(y_pred - y))
    grads = tape.gradient(loss, model.variables)
    optimizer.apply_gradients(grads_and_vars=zip(grads, model.variables))
print(model.variables)

Error message:

D:\ProgramData\Anaconda3\python.exe E:/develop/python/tensorflow/pyqt.py
Traceback (most recent call last):
  File "D:\ProgramData\Anaconda3\lib\site-packages\tensorflow_core\python\framework\constant_op.py", line 92, in convert_to_eager_tensor
    dtype = dtype.as_datatype_enum
AttributeError: 'list' object has no attribute 'as_datatype_enum'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "E:/develop/python/tensorflow/pyqt.py", line 3, in <module>
    x = tf.constant([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
  File "D:\ProgramData\Anaconda3\lib\site-packages\tensorflow_core\python\framework\constant_op.py", line 258, in constant
    allow_broadcast=True)
  File "D:\ProgramData\Anaconda3\lib\site-packages\tensorflow_core\python\framework\constant_op.py", line 266, in _constant_impl
    t = convert_to_eager_tensor(value, ctx, dtype)
  File "D:\ProgramData\Anaconda3\lib\site-packages\tensorflow_core\python\framework\constant_op.py", line 94, in convert_to_eager_tensor
    dtype = dtypes.as_dtype(dtype).as_datatype_enum
  File "D:\ProgramData\Anaconda3\lib\site-packages\tensorflow_core\python\framework\dtypes.py", line 720, in as_dtype
    return _ANY_TO_TF[type_value]
TypeError: unhashable type: 'list'


April 2020

▶ 话_陈

You are missing one level of square brackets when creating X and y. It should be written like this:

X = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
y = tf.constant([[10.0], [20.0]])
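This explains the odd traceback. `tf.constant(value, dtype=None, shape=None, name='Const')` treats its second positional argument as the dtype, so `[4.0, 5.0, 6.0]` was passed as a dtype, and that is why the error complains about a `list`. The model needs 2-D batches: `X` with shape (2, 3) (two samples, three features) and `y` with shape (2, 1). Below is a NumPy sketch of what the zero-initialized `Dense(units=1)` layer in `Linear` computes on these shapes. It assumes plain gradient descent on the mean squared loss, as in the code above. It illustrates the math only and is not the Keras implementation.

```python
import numpy as np

X = np.array([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])  # shape (2, 3)
y = np.array([[10.0], [20.0]])                    # shape (2, 1)

# Zero initialization, as with tf.zeros_initializer() in the Linear model.
kernel = np.zeros((3, 1))  # three input features -> one output unit
bias = np.zeros((1,))

learning_rate = 0.01
for _ in range(100):
    y_pred = X @ kernel + bias  # shape (2, 1), like Dense(units=1)
    residual = y_pred - y
    # Gradients of mean((y_pred - y)^2), averaged over residual.size elements.
    grad_kernel = 2.0 * X.T @ residual / residual.size
    grad_bias = 2.0 * residual.sum(axis=0) / residual.size
    kernel -= learning_rate * grad_kernel
    bias -= learning_rate * grad_bias

print(X.shape, y.shape, y_pred.shape)
print(kernel.ravel(), bias)
```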


May 2020

with tf.GradientTape() as tape:
    L = tf.reduce_sum(tf.square(tf.matmul(X, w) + b - y))
w_grad, b_grad = tape.gradient(L, [w, b])

Here L involves a sum. How is its partial derivative computed?
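Differentiation is linear, so the gradient of a sum is the sum of the per-term gradients. For $L = \sum_i (x_i^\top w + b - y_i)^2$, each term contributes $2(x_i^\top w + b - y_i)\,x_i$ to $\partial L/\partial w$ and $2(x_i^\top w + b - y_i)$ to $\partial L/\partial b$. Automatic differentiation applies the same rule. The NumPy sketch below (not TensorFlow's internals) checks that summing the per-sample gradients gives the same result as the matrix form, using the values from the example:

```python
import numpy as np

X = np.array([[1.0, 2.0], [3.0, 4.0]])
y = np.array([[1.0], [2.0]])
w = np.array([[1.0], [2.0]])
b = 1.0

# Per-sample gradients of (x_i^T w + b - y_i)^2, accumulated term by term.
w_grad_sum = np.zeros_like(w)
b_grad_sum = 0.0
for x_i, y_i in zip(X, y):
    r_i = x_i @ w + b - y_i  # residual of sample i, shape (1,)
    w_grad_sum += 2.0 * r_i * x_i.reshape(-1, 1)
    b_grad_sum += 2.0 * r_i.item()

# The same gradients computed in one step in matrix form.
r = X @ w + b - y
w_grad_matrix = 2.0 * X.T @ r
b_grad_matrix = 2.0 * r.sum()

# Both versions give dL/dw = [70, 100] and dL/db = 30.
print(w_grad_sum.ravel(), b_grad_sum)
print(w_grad_matrix.ravel(), b_grad_matrix)
```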


May 2020

▶ ikou-austin

May 2020

June 2020

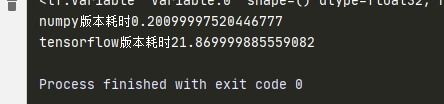

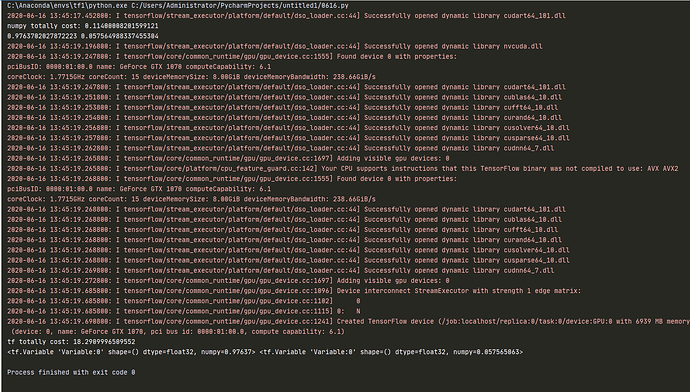

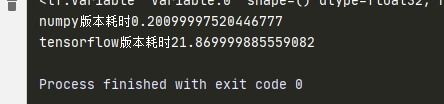

Why is TensorFlow so much slower than the NumPy version in this example?


June 2020

▶ Tanxinman

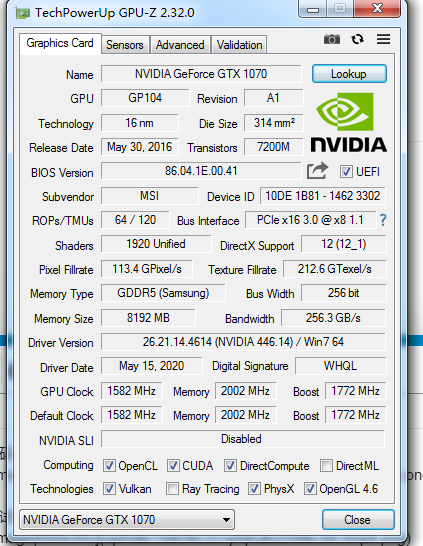

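I don't see this on my side. My guess is that your measurement includes TensorFlow's various initialization times. Could you post the complete code you ran and your hardware configuration (especially the GPU)? Also try running your code in Colab.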
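When comparing the two versions, you can keep one-time costs of the first call (for example device initialization) out of the measurement by running the function once before timing it. Below is a generic Python sketch. `time_call` and `workload` are hypothetical helpers, and `time.perf_counter` comes from the standard library.

```python
import time

def time_call(fn, *args, warmup=1, repeat=3):
    """Return the best wall-clock time in seconds over `repeat` runs of fn(*args),
    after `warmup` untimed runs that absorb one-time initialization costs."""
    for _ in range(warmup):
        fn(*args)
    best = float("inf")
    for _ in range(repeat):
        start = time.perf_counter()
        fn(*args)
        best = min(best, time.perf_counter() - start)
    return best

# Stand-in workload; replace it with the NumPy or TensorFlow training loop.
def workload(n):
    return sum(i * i for i in range(n))

print(f"{time_call(workload, 100_000):.4f} s")
```

Taking the best of several runs reduces noise from other processes. The warm-up runs are what keep initialization out of the comparison.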


June 2020

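I looked at it again with your code, and the effect is indeed there. My understanding is that the computation in this example is very simple (just one multiplication and one addition) and very small, so TensorFlow gets no benefit from the GPU. Computing the derivatives automatically at every step also adds overhead, so it will inevitably be slower than a hand-written derivative. If we increase the amount of data in this example, for instance:

X_raw = np.array(range(1, 1000000), dtype=np.float32)
y_raw = np.array(range(1, 1000000), dtype=np.float32)
learning_rate = 5e-7

you'll find that TensorFlow is faster than NumPy (NumPy 32.12 s, TensorFlow 22.73 s, Colab GPU mode).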

August 2020

▶ snowkylin

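I understand the chain rule itself. It's just that here we differentiate with respect to a and b. With multiple x and y values, is that still called the chain rule?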


August 2020

▶ David_Lee

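These are all warnings. The first batch is probably caused by your NumPy version being too new. You can look up which NumPy version matches your TF version, or edit the file directly: change _np_quint8 = np.dtype([("quint8", np.uint8, 1)]) to _np_quint8 = np.dtype([("quint8", np.uint8, (1,))])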
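Here is a NumPy sketch of the two non-deprecated spellings. Editing installed library files is a workaround; installing the NumPy version your TensorFlow release supports is usually cleaner. According to the warning, the old `(type, 1)` was a synonym for the plain type, that is, a scalar field. `(1,)` is the future meaning the warning describes: a one-element sub-array field. Leaving the count out entirely preserves the old scalar meaning.

```python
import numpy as np

# Scalar field: what ("qint8", np.int8, 1) used to mean (a synonym for the type).
scalar_dt = np.dtype([("qint8", np.int8)])

# One-element sub-array field: the future meaning of the old spelling,
# and what the (1,) edit produces.
subarray_dt = np.dtype([("qint8", np.int8, (1,))])

print(scalar_dt["qint8"].shape)    # ()
print(subarray_dt["qint8"].shape)  # (1,)
```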

October 2020

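If we use TensorFlow to compute the gradients here, do we need to derive the gradient formulas ourselves, or is it enough to define the loss function and let TensorFlow compute the gradients automatically?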
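With `tf.GradientTape`, as in the examples in this thread, you only write the loss; the tape computes the partial derivatives. In a plain NumPy version, by contrast, you have to derive the gradient formulas by hand. Below is a minimal sketch of such a manual version on the normalized housing data posted above. The learning rate 5e-4 is the one quoted earlier in the thread. `num_epoch = 10000` is an assumption chosen for this illustration.

```python
import numpy as np

X_raw = np.array([2013, 2014, 2015, 2016, 2017], dtype=np.float32)
y_raw = np.array([12000, 14000, 15000, 16500, 17500], dtype=np.float32)
X = (X_raw - X_raw.min()) / (X_raw.max() - X_raw.min())
y = (y_raw - y_raw.min()) / (y_raw.max() - y_raw.min())

a, b = 0.0, 0.0
num_epoch = 10000      # assumption for this sketch
learning_rate = 5e-4
for _ in range(num_epoch):
    y_pred = a * X + b
    # Hand-derived gradients of L = sum((a*X + b - y)^2):
    # dL/da = 2 * sum((y_pred - y) * X), dL/db = 2 * sum(y_pred - y)
    grad_a = 2 * np.sum((y_pred - y) * X)
    grad_b = 2 * np.sum(y_pred - y)
    a, b = a - learning_rate * grad_a, b - learning_rate * grad_b

print(a, b)
```

The TensorFlow version replaces the two `grad_*` lines with `tape.gradient(loss, variables)`.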


November 2020

▶ HenryDu980219

November 2020

When computing the loss, why use the total loss instead of the average loss?
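Choosing the sum instead of the mean only scales the loss, and therefore its gradient, by the constant n (the number of samples). With plain gradient descent, this is equivalent to multiplying the learning rate by n, and the minimizer is the same. The NumPy sketch below compares the two on the five normalized data points, with the y values rounded; the parameter values are arbitrary.

```python
import numpy as np

X = np.array([0.0, 0.25, 0.5, 0.75, 1.0])
y = np.array([0.0, 0.36, 0.55, 0.82, 1.0])  # normalized prices, rounded
a, b = 0.3, 0.1  # arbitrary parameter values for the comparison
n = len(X)

residual = a * X + b - y
# Gradient of the summed loss sum(residual^2) with respect to (a, b).
grad_sum = np.array([2 * np.sum(residual * X), 2 * np.sum(residual)])
# Gradient of the mean loss mean(residual^2) with respect to (a, b).
grad_mean = np.array([2 * np.mean(residual * X), 2 * np.mean(residual)])

# One step on the summed loss with rate lr equals one step on the mean loss with rate n * lr.
lr = 1e-3
step_sum = lr * grad_sum
step_mean = (n * lr) * grad_mean
print(np.allclose(step_sum, step_mean))  # True
```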


March 2021

My computer has integrated graphics. Here is the code:

import tensorflow as tf
x = tf.Variable(initial_value=3.)
with tf.GradientTape() as tape:
    y = tf.square(x)
y_grad = tape.gradient(y, x)
print(y, y_grad)

The code runs and the result is correct, but some messages appear that I don't understand. What do they mean?

tf.Tensor(9.0, shape=(), dtype=float32) tf.Tensor(6.0, shape=(), dtype=float32)

2021-03-09 13:56:02.374852: I tensorflow/compiler/jit/xla_cpu_device.cc:41] Not creating XLA devices, tf_xla_enable_xla_devices not set

2021-03-09 13:56:02.375186: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.


September 2021

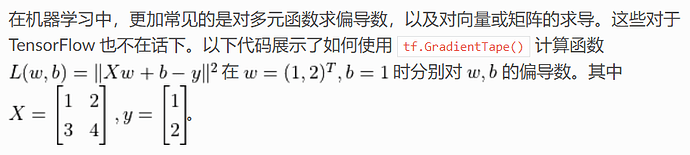

How would you compute the partial derivatives of L with respect to w and b by hand here?


September 2021

▶ tiebo.fu

With Xw+b-y = \begin{bmatrix}1 & 2 \\ 3 & 4\end{bmatrix}\begin{bmatrix}1 \\ 2\end{bmatrix} + 1 - \begin{bmatrix}1 \\ 2\end{bmatrix} = \begin{bmatrix}5 \\ 10\end{bmatrix}:

\frac{\partial L(w,b)}{\partial w} = 2X^\top(Xw+b-y) = 2\begin{bmatrix}1 & 3 \\ 2 & 4\end{bmatrix}\begin{bmatrix}5 \\ 10\end{bmatrix} = \begin{bmatrix}70 \\ 100\end{bmatrix}

\frac{\partial L(w,b)}{\partial b} = 2\begin{bmatrix}1 & 1\end{bmatrix}(Xw+b-y) = 2\begin{bmatrix}1 & 1\end{bmatrix}\begin{bmatrix}5 \\ 10\end{bmatrix} = 30

See: Matrix calculus - Wikipedia
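You can check the closed-form gradients numerically with central finite differences. The NumPy sketch below uses the same X, y, w and b as the example. The step size `eps` is an assumption. Because L is quadratic, the central difference matches the closed form up to rounding error.

```python
import numpy as np

X = np.array([[1.0, 2.0], [3.0, 4.0]])
y = np.array([[1.0], [2.0]])
w = np.array([[1.0], [2.0]])
b = 1.0

def loss(w, b):
    """L(w, b) = sum((Xw + b - y)^2), the loss from the example."""
    return np.sum(np.square(X @ w + b - y))

# Closed form: dL/dw = 2 X^T (Xw + b - y), dL/db = 2 * sum(Xw + b - y).
r = X @ w + b - y
w_grad = 2.0 * X.T @ r
b_grad = 2.0 * np.sum(r)

# Central finite differences, perturbing one coordinate of w at a time.
eps = 1e-6
w_grad_num = np.zeros_like(w)
for i in range(w.shape[0]):
    step = np.zeros_like(w)
    step[i, 0] = eps
    w_grad_num[i, 0] = (loss(w + step, b) - loss(w - step, b)) / (2 * eps)
b_grad_num = (loss(w, b + eps) - loss(w, b - eps)) / (2 * eps)

print(w_grad.ravel(), b_grad)          # closed form: 70, 100 and 30
print(w_grad_num.ravel(), b_grad_num)  # numerically close to the same values
```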