参考 https://tf.wiki/zh_hans/basic/basic.html#zh-hans-optimizer ,指定待求导的函数 f 和求导的变量 x (也就是,哪个函数对哪个变量求导, \frac{\partial f}{\partial x} ),即可自动计算梯度。

计算loss的时候为什么不计算平均loss呢,而是总的损失?

两者没有本质区别,参考注释4

懂了,非常感谢

我的电脑是集成显卡,code如下

import tensorflow as tf

x = tf.Variable(initial_value=3.)

with tf.GradientTape() as tape:

y = tf.square(x)

y_grad = tape.gradient(y, x)

print(y, y_grad)

运行后结果没问题,但出现了一些提示,费解!请问是怎么回事呢?

tf.Tensor(9.0, shape=(), dtype=float32) tf.Tensor(6.0, shape=(), dtype=float32)

2021-03-09 13:56:02.374852: I tensorflow/compiler/jit/xla_cpu_device.cc:41] Not creating XLA devices, tf_xla_enable_xla_devices not set

2021-03-09 13:56:02.375186: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

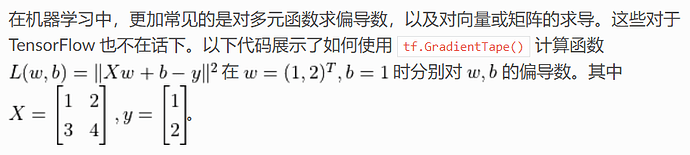

\frac{\partial L(w,b)}{\partial w} = 2X^\top(Xw+b-y) = \begin{bmatrix}1 & 3 \\ 2 & 4\end{bmatrix}\begin{bmatrix}10 \\ 20\end{bmatrix} = \begin{bmatrix}70 \\ 100\end{bmatrix}

\frac{\partial L(w,b)}{\partial b} = 2\begin{bmatrix}1 & 1\end{bmatrix}(Xw+b-y) = \begin{bmatrix}1 & 1\end{bmatrix}\begin{bmatrix}10 \\ 20\end{bmatrix} = 30