The tf.load_saved_model method in the section "Exporting a complete model with SavedModel" no longer works. Please update it.

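Hi, I ran the example code under TensorFlow 2.1 and it seems to work fine. The article never uses the form tf.load_saved_model. Could you point out exactly which sentence or paragraph no longer works? Thanks!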

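Hi, I saved my model, and at test time, after loading it back, calling model.call keeps raising an error. I checked my code: my model class inherits from tf.keras.Model, and I did put the tf.function decorator in front of the call method, so I don't understand why I still get this error: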

```
ValueError: Could not find matching function to call loaded from the SavedModel. Got:
  Positional arguments (1 total):
    * Tensor("input:0", shape=(60, 28, 28, 1), dtype=float64)
  Keyword arguments: {}

Expected these arguments to match one of the following 1 option(s):

Option 1:
  Positional arguments (1 total):
    * TensorSpec(shape=(20, 28, 28, 1), dtype=tf.float32, name='input')
  Keyword arguments: {}
```

Here is my complete code:

```python
import argparse

import numpy as np
import tensorflow as tf

# Read command-line arguments
arg = argparse.ArgumentParser()
arg.add_argument("--num_epoch", default=5)  # optional arguments should usually have defaults
arg.add_argument("--batch_size", default=20)
arg.add_argument("--learning_rate", default=0.001)
arg.add_argument("--mode", default="train")
res = arg.parse_args()


# Model class
class Model(tf.keras.Model):
    # Create all layers of the model
    def __init__(self):
        super().__init__()  # call super() to initialize the parent class
        self.flatten = tf.keras.layers.Flatten()
        self.dense1 = tf.keras.layers.Dense(100, activation=tf.nn.relu)
        self.dense2 = tf.keras.layers.Dense(10, activation=None)

    # Define the forward pass
    @tf.function
    def call(self, input):
        x = self.flatten(input)
        x = self.dense1(x)
        x = self.dense2(x)
        output = tf.nn.softmax(x)  # classification models usually end with a softmax layer
        return output


# Data class
class Data():
    # Load the dataset, normalize it and add a channel dimension
    def __init__(self):
        (self.train_x, self.train_y), (self.test_x, self.test_y) = tf.keras.datasets.mnist.load_data()
        self.train_x = np.expand_dims(self.train_x.astype(np.float64) / 255.0, axis=-1)
        self.test_x = np.expand_dims(self.test_x.astype(np.float64) / 255.0, axis=-1)
        self.train_y = self.train_y.astype(np.int64)
        self.test_y = self.test_y.astype(np.int64)
        self.train_num = self.train_x.shape[0]
        self.test_num = self.test_x.shape[0]

    # Randomly sample batch_size examples from the training set
    def get_data(self, batch_size):
        vals = np.random.randint(0, self.train_num, batch_size)
        return self.train_x[vals, :], self.train_y[vals]


# Training function
def train():
    # Read the hyperparameters and instantiate the model and data classes
    num_epochs = int(res.num_epoch)  # command-line values arrive as str, so convert them
    batch_size = int(res.batch_size)
    lr = float(res.learning_rate)
    model = Model()
    # Checkpoint saver; CheckpointManager() lets you customize how checkpoints are kept
    # checkpoint = tf.train.Checkpoint(SModel=model)
    # manager = tf.train.CheckpointManager(checkpoint, './save', max_to_keep=3)
    data = Data()
    optimizer = tf.keras.optimizers.Adam(learning_rate=lr)
    num_size = data.train_num // batch_size * num_epochs
    log = 'log'
    # Summary writer used to write values to the log files
    writer = tf.summary.create_file_writer(log)
    # Start tracing: records the graph and per-op timings
    tf.summary.trace_on(graph=True, profiler=True)
    for i in range(num_size):
        x, y = data.get_data(batch_size)
        # The tape records the forward pass and the loss computation
        with tf.GradientTape() as tape:
            y_pre = model(x)
            loss = tf.keras.losses.sparse_categorical_crossentropy(y_true=y, y_pred=y_pre)
            loss = tf.reduce_sum(loss)
        # Write the loss to the log
        with writer.as_default():
            tf.summary.scalar("loss", loss, step=i)
        if i % 100 == 0:
            print("Batch: {}  loss: {}".format(i, loss))
        grad = tape.gradient(loss, model.variables)
        optimizer.apply_gradients(grads_and_vars=zip(grad, model.variables))
        # if i % 100 == 0:
        #     path = manager.save(checkpoint_number=i)  # number the ckpt and return its path
        #     print("Model saved to: {}".format(path))
    # Export the trace to the log files
    with writer.as_default():
        tf.summary.trace_export("trace", step=0, profiler_outdir=log)
    tf.saved_model.save(model, 'Trained_Models')
    print("Model saved!")


# Test function
def test():
    # Alternative: restore the latest ckpt into a fresh model
    # model = Model()
    # checkpoint = tf.train.Checkpoint(SModel=model)  # SModel must match the name used when saving
    # checkpoint.restore(tf.train.latest_checkpoint('./save'))
    model = tf.saved_model.load('./Trained_Models')
    print("Model loaded")
    data = Data()
    batch_size = int(res.batch_size)
    num_size = data.test_num // batch_size
    # Accuracy metric
    metri = tf.keras.metrics.SparseCategoricalAccuracy()
    for i in range(num_size):
        x, y = data.test_x[i * batch_size:(i + 1) * batch_size, :], data.test_y[i * batch_size:(i + 1) * batch_size]
        y_pre = model.call(x)
        # Update the accuracy metric
        metri.update_state(y_true=y, y_pred=y_pre)
    # Print the final accuracy
    print("Final accuracy: {}".format(metri.result()))


if __name__ == '__main__':
    if res.mode == 'train':
        train()
    if res.mode == 'test':
        test()
```

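Judging from the error message, your batch size seems to differ between training and testing. See https://tf.wiki/zh_hans/deployment/serving.html#keras for how to set a TensorSpec on @tf.function, using None for the batch dimension so that the batch size can vary.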
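A minimal sketch of that suggestion, assuming the Model class from the question (re-declared so the block runs on its own; the temporary directory stands in for 'Trained_Models'). The input_signature fixes the exported graph to float32 inputs with a variable batch dimension (None). Calling the restored `call` directly does not convert dtypes, so the float64 test data is cast first.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


class Model(tf.keras.Model):
    def __init__(self):
        super().__init__()
        self.flatten = tf.keras.layers.Flatten()
        self.dense1 = tf.keras.layers.Dense(100, activation=tf.nn.relu)
        self.dense2 = tf.keras.layers.Dense(10)

    # None in the first dimension: the exported graph accepts any batch size.
    # float32: callers must pass float32 tensors to the restored function.
    @tf.function(input_signature=[tf.TensorSpec([None, 28, 28, 1], tf.float32)])
    def call(self, inputs):
        x = self.flatten(inputs)
        x = self.dense1(x)
        x = self.dense2(x)
        return tf.nn.softmax(x)


model = Model()
model(np.zeros((20, 28, 28, 1), dtype=np.float32))  # e.g. one training batch of 20

# Temporary directory standing in for 'Trained_Models'.
export_dir = os.path.join(tempfile.mkdtemp(), "Trained_Models")
tf.saved_model.save(model, export_dir)

loaded = tf.saved_model.load(export_dir)
x_test = np.random.rand(60, 28, 28, 1)            # float64, like the Data class produces
y_pre = loaded.call(tf.cast(x_test, tf.float32))  # batch of 60, cast to float32
print(y_pre.shape)  # (60, 10)
```

With the TensorSpec in place, the batch size used at test time no longer has to match the one used during training.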

Thanks, problem solved. The cause was the data type when predicting with the loaded model: after I changed the argument passed to model.call to tf.float32, it worked.

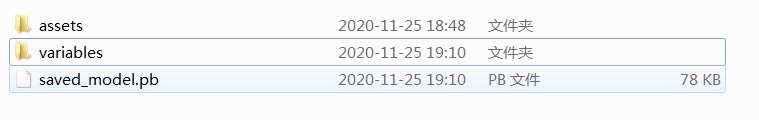

tf.saved_model.save(model, "target_folder_name") doesn't seem to offer any way to name the saved .pb file. Can save and load only tell models apart by folder? Must the model file always be named saved_model.pb?

See https://www.tensorflow.org/guide/saved_model#the_savedmodel_format_on_disk. By default that does seem to be the case. If anyone finds a way to change it, please post it here.
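A small sketch of the usual workaround, giving each model its own export directory because the graph file inside is always saved_model.pb. The `Scale` module and the directory names "double" and "triple" are hypothetical and exist only for illustration.

```python
import os
import tempfile

import tensorflow as tf


class Scale(tf.Module):
    """Hypothetical toy module, used only to show the on-disk layout."""

    def __init__(self, factor):
        super().__init__()
        self.factor = tf.Variable(factor)

    @tf.function(input_signature=[tf.TensorSpec([None], tf.float32)])
    def __call__(self, x):
        return x * self.factor


root = tempfile.mkdtemp()
# One directory per model: the directory name is what distinguishes them.
for name, factor in [("double", 2.0), ("triple", 3.0)]:
    tf.saved_model.save(Scale(factor), os.path.join(root, name))

for name in ("double", "triple"):
    # Each listing contains saved_model.pb plus a variables/ directory.
    print(name, sorted(os.listdir(os.path.join(root, name))))

triple = tf.saved_model.load(os.path.join(root, "triple"))
print(triple(tf.constant([1.0, 2.0])).numpy())
```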

Running this code raised `ipykernel_launcher.py: error: unrecognized arguments:`. Replacing parser.parse_args() with parser.parse_known_args()[0] solved it. Code:

```python
import tensorflow as tf
import numpy as np
import argparse
from zh.model.mnist.mlp import MLP
from zh.model.utils import MNISTLoader

parser = argparse.ArgumentParser(description='Process some integers.')
parser.add_argument('--mode', default='train', help='train or test')
parser.add_argument('--num_epochs', default=1)
parser.add_argument('--batch_size', default=50)
parser.add_argument('--learning_rate', default=0.0001)
args = parser.parse_known_args()[0]
data_loader = MNISTLoader()
```
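A standard-library-only sketch of why that works. Jupyter launches the kernel with arguments of its own (roughly `-f <connection file>`; the path below is made up). parse_args() rejects them, while parse_known_args() returns them separately as a list. The `type=` arguments are an addition not in the original code, so values passed on the command line arrive as numbers.

```python
import argparse

parser = argparse.ArgumentParser(description="Process some integers.")
parser.add_argument("--mode", default="train", help="train or test")
parser.add_argument("--num_epochs", default=1, type=int)
parser.add_argument("--batch_size", default=50, type=int)
parser.add_argument("--learning_rate", default=0.0001, type=float)

# Imitates the extra argv a Jupyter kernel receives (made-up path).
jupyter_argv = ["-f", "/tmp/kernel-1234.json"]

# parse_known_args returns (namespace, leftover arguments) instead of exiting.
args, unknown = parser.parse_known_args(jupyter_argv)
print(args.mode, args.batch_size)  # train 50
print(unknown)                     # ['-f', '/tmp/kernel-1234.json']
```

Calling parser.parse_args(jupyter_argv) instead would print the "unrecognized arguments" error and raise SystemExit, which matches the error reported above.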

Why use model.call instead of model.predict?

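In this example, for a Keras model built by subclassing tf.keras.Model, the call method is decorated with @tf.function so that it is converted into a computation graph that SavedModel supports. If you also write a separate predict method and decorate it with @tf.function so it becomes a graph too, you can of course call model.predict as well.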
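A minimal sketch of that idea, assuming a small MLP. The extra method is given the hypothetical name `predict_label` because a method literally named `predict` would shadow Keras' own tf.keras.Model.predict. Because it is a tf.function with an input_signature, SavedModel exports it and it can be called on the restored object.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


class MLP(tf.keras.Model):
    def __init__(self):
        super().__init__()
        self.flatten = tf.keras.layers.Flatten()
        self.dense = tf.keras.layers.Dense(10)

    # Plain eager call, used during training.
    def call(self, inputs):
        return tf.nn.softmax(self.dense(self.flatten(inputs)))

    # Hypothetical extra graph-mode method exported alongside the model.
    @tf.function(input_signature=[tf.TensorSpec([None, 28, 28, 1], tf.float32)])
    def predict_label(self, inputs):
        return tf.argmax(self(inputs), axis=-1)


model = MLP()
model(np.zeros((1, 28, 28, 1), dtype=np.float32))  # build the layers once
export_dir = os.path.join(tempfile.mkdtemp(), "mlp")
tf.saved_model.save(model, export_dir)

loaded = tf.saved_model.load(export_dir)
labels = loaded.predict_label(tf.zeros([5, 28, 28, 1]))
print(labels.numpy())
```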

How can I get the names of all nodes in the .pb file exported with tf.saved_model.save()?

pb (Protocol Buffers) is a general-purpose serialization mechanism, not something specific to TensorFlow. The most direct approach is to follow the Protocol Buffers documentation at https://developers.google.cn/protocol-buffers. You can also look at https://mp.weixin.qq.com/s?__biz=MzU1OTMyNDcxMQ==&mid=2247487599&idx=1&sn=13a53532ad1d2528f0ece4f33e3ae143&chksm=fc185b27cb6fd2313992f8f2644b0a10e8dd7724353ff5e93a97d121cd1c7f3a4d4fcbcb82e8&scene=21#wechat_redirect, which compares computation-graph node names.
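A sketch of the Protocol Buffers route, assuming TensorFlow's generated `saved_model_pb2` module. In a TF2 SavedModel, most of the computation lives in the graph's function library rather than in the top-level graph, so the helper collects both. The `Scale` module is a hypothetical toy that exists only so there is something to inspect.

```python
import os
import tempfile

import tensorflow as tf
from tensorflow.core.protobuf import saved_model_pb2


def list_node_names(export_dir):
    """Parse <export_dir>/saved_model.pb and return
    (top-level node names, {function name: [node names]})."""
    saved_model = saved_model_pb2.SavedModel()
    with open(os.path.join(export_dir, "saved_model.pb"), "rb") as f:
        saved_model.ParseFromString(f.read())
    graph_def = saved_model.meta_graphs[0].graph_def
    top_level = [node.name for node in graph_def.node]
    functions = {
        fn.signature.name: [node.name for node in fn.node_def]
        for fn in graph_def.library.function
    }
    return top_level, functions


class Scale(tf.Module):
    """Hypothetical toy module."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(2.0)

    @tf.function(input_signature=[tf.TensorSpec([None], tf.float32)])
    def __call__(self, x):
        return x * self.w


export_dir = os.path.join(tempfile.mkdtemp(), "scale")
tf.saved_model.save(Scale(), export_dir)

top_level, functions = list_node_names(export_dir)
print(top_level)
for name, nodes in functions.items():
    print(name, nodes)
```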

Does Keras model saving also save the trained parameters? Why is it that when I save the model and then load it in another file, the model is a "_UserObject"?

None of the model's methods can be called. Code below (TF 2.3.0):

Save: tf.saved_model.save(model, "model/1")

Load: model = tf.saved_model.load("model/1")

The path is correct.

Error:

```
Message='_UserObject' object has no attribute 'call'
Source=F:\codes\vs_projects\pythonExample1\test.py
StackTrace:
  File "F:\codes\vs_projects\pythonExample1\test.py", line 40, in
    y_pred = model.call(img/255.0)
```

Please provide the complete, runnable source code.

Buddy, take a look at this. It isn't hard.

Is this your channel? Nice!

I'm building an encoder-decoder image captioning model, with code from https://www.tensorflow.org/tutorials/text/image_captioning, but I can't save the decoder model in .h5 format. The error is:

```python
tf.saved_model.save(decoder,"./model/decoder.h5")
```

```
---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
<ipython-input-24-b537cb76540e> in <module>
----> 1 tf.saved_model.save(decoder,"./model/decoder.h5")

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/saved_model/save.py in save(obj, export_dir, signatures, options)
    884 if signatures is None:
    885 signatures = signature_serialization.find_function_to_export(
--> 886 checkpoint_graph_view)
    887
    888 signatures = signature_serialization.canonicalize_signatures(signatures)

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/saved_model/signature_serialization.py in find_function_to_export(saveable_view)
     72 # If the user did not specify signatures, check the root object for a function
     73 # that can be made into a signature.
---> 74 functions = saveable_view.list_functions(saveable_view.root)
     75 signature = functions.get(DEFAULT_SIGNATURE_ATTR, None)
     76 if signature is not None:

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/saved_model/save.py in list_functions(self, obj)
    140 if obj_functions is None:
    141 obj_functions = obj._list_functions_for_serialization( # pylint: disable=protected-access
--> 142 self._serialization_cache)
    143 self._functions[obj] = obj_functions
    144 return obj_functions

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/base_layer.py in _list_functions_for_serialization(self, serialization_cache)
   2418 def _list_functions_for_serialization(self, serialization_cache):
   2419 return (self._trackable_saved_model_saver
-> 2420 .list_functions_for_serialization(serialization_cache))
   2421
   2422 def __getstate__(self):

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/saving/saved_model/base_serialization.py in list_functions_for_serialization(self, serialization_cache)
     89 `ConcreteFunction`.
     90 """
---> 91 fns = self.functions_to_serialize(serialization_cache)
     92
     93 # The parent AutoTrackable class saves all user-defined tf.functions, and

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/saving/saved_model/layer_serialization.py in functions_to_serialize(self, serialization_cache)
     78 def functions_to_serialize(self, serialization_cache):
     79 return (self._get_serialized_attributes(
---> 80 serialization_cache).functions_to_serialize)
     81
     82 def _get_serialized_attributes(self, serialization_cache):

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/saving/saved_model/layer_serialization.py in _get_serialized_attributes(self, serialization_cache)
     93
     94 object_dict, function_dict = self._get_serialized_attributes_internal(
---> 95 serialization_cache)
     96
     97 serialized_attr.set_and_validate_objects(object_dict)

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/saving/saved_model/model_serialization.py in _get_serialized_attributes_internal(self, serialization_cache)
     45 # cache (i.e. this is the root level object).
     46 if len(serialization_cache[constants.KERAS_CACHE_KEY]) == 1:
---> 47 default_signature = save_impl.default_save_signature(self.obj)
     48
     49 # Other than the default signature function, all other attributes match with

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/saving/saved_model/save_impl.py in default_save_signature(layer)
    210 original_losses = _reset_layer_losses(layer)
    211 fn = saving_utils.trace_model_call(layer)
--> 212 fn.get_concrete_function()
    213 _restore_layer_losses(original_losses)
    214 return fn

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/def_function.py in get_concrete_function(self, *args, **kwargs)
    907 if self._stateful_fn is None:
    908 initializers = []
--> 909 self._initialize(args, kwargs, add_initializers_to=initializers)
    910 self._initialize_uninitialized_variables(initializers)
    911

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/def_function.py in _initialize(self, args, kwds, add_initializers_to)
    495 self._concrete_stateful_fn = (
    496 self._stateful_fn._get_concrete_function_internal_garbage_collected( # pylint: disable=protected-access
--> 497 *args, **kwds))
    498
    499 def invalid_creator_scope(*unused_args, **unused_kwds):

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py in _get_concrete_function_internal_garbage_collected(self, *args, **kwargs)
   2387 args, kwargs = None, None
   2388 with self._lock:
-> 2389 graph_function, _, _ = self._maybe_define_function(args, kwargs)
   2390 return graph_function
   2391

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py in _maybe_define_function(self, args, kwargs)
   2701
   2702 self._function_cache.missed.add(call_context_key)
-> 2703 graph_function = self._create_graph_function(args, kwargs)
   2704 self._function_cache.primary[cache_key] = graph_function
   2705 return graph_function, args, kwargs

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py in _create_graph_function(self, args, kwargs, override_flat_arg_shapes)
   2591 arg_names=arg_names,
   2592 override_flat_arg_shapes=override_flat_arg_shapes,
-> 2593 capture_by_value=self._capture_by_value),
   2594 self._function_attributes,
   2595 # Tell the ConcreteFunction to clean up its graph once it goes out of

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/framework/func_graph.py in func_graph_from_py_func(name, python_func, args, kwargs, signature, func_graph, autograph, autograph_options, add_control_dependencies, arg_names, op_return_value, collections, capture_by_value, override_flat_arg_shapes)
    976 converted_func)
    977
--> 978 func_outputs = python_func(*func_args, **func_kwargs)
    979
    980 # invariant: `func_outputs` contains only Tensors, CompositeTensors,

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/def_function.py in wrapped_fn(*args, **kwds)
    437 # __wrapped__ allows AutoGraph to swap in a converted function. We give
    438 # the function a weak reference to itself to avoid a reference cycle.
--> 439 return weak_wrapped_fn().__wrapped__(*args, **kwds)
    440 weak_wrapped_fn = weakref.ref(wrapped_fn)
    441

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/saving/saving_utils.py in _wrapped_model(*args)
    148 with base_layer_utils.call_context().enter(
    149 model, inputs=inputs, build_graph=False, training=False, saving=True):
--> 150 outputs_list = nest.flatten(model(inputs=inputs, training=False))
    151
    152 try:

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/base_layer.py in __call__(self, inputs, *args, **kwargs)
    776 outputs = base_layer_utils.mark_as_return(outputs, acd)
    777 else:
--> 778 outputs = call_fn(cast_inputs, *args, **kwargs)
    779
    780 except errors.OperatorNotAllowedInGraphError as e:

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/def_function.py in __call__(self, *args, **kwds)
    566 xla_context.Exit()
    567 else:
--> 568 result = self._call(*args, **kwds)
    569
    570 if tracing_count == self._get_tracing_count():

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/def_function.py in _call(self, *args, **kwds)
    597 # In this case we have created variables on the first call, so we run the
    598 # defunned version which is guaranteed to never create variables.
--> 599 return self._stateless_fn(*args, **kwds) # pylint: disable=not-callable
    600 elif self._stateful_fn is not None:
    601 # Release the lock early so that multiple threads can perform the call

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py in __call__(self, *args, **kwargs)
   2360 """Calls a graph function specialized to the inputs."""
   2361 with self._lock:
-> 2362 graph_function, args, kwargs = self._maybe_define_function(args, kwargs)
   2363 return graph_function._filtered_call(args, kwargs) # pylint: disable=protected-access
   2364

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py in _maybe_define_function(self, args, kwargs)
   2701
   2702 self._function_cache.missed.add(call_context_key)
-> 2703 graph_function = self._create_graph_function(args, kwargs)
   2704 self._function_cache.primary[cache_key] = graph_function
   2705 return graph_function, args, kwargs

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py in _create_graph_function(self, args, kwargs, override_flat_arg_shapes)
   2591 arg_names=arg_names,
   2592 override_flat_arg_shapes=override_flat_arg_shapes,
-> 2593 capture_by_value=self._capture_by_value),
   2594 self._function_attributes,
   2595 # Tell the ConcreteFunction to clean up its graph once it goes out of

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/framework/func_graph.py in func_graph_from_py_func(name, python_func, args, kwargs, signature, func_graph, autograph, autograph_options, add_control_dependencies, arg_names, op_return_value, collections, capture_by_value, override_flat_arg_shapes)
    976 converted_func)
    977
--> 978 func_outputs = python_func(*func_args, **func_kwargs)
    979
    980 # invariant: `func_outputs` contains only Tensors, CompositeTensors,

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/def_function.py in wrapped_fn(*args, **kwds)
    437 # __wrapped__ allows AutoGraph to swap in a converted function. We give
    438 # the function a weak reference to itself to avoid a reference cycle.
--> 439 return weak_wrapped_fn().__wrapped__(*args, **kwds)
    440 weak_wrapped_fn = weakref.ref(wrapped_fn)
    441

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py in bound_method_wrapper(*args, **kwargs)
   3209 # However, the replacer is still responsible for attaching self properly.
   3210 # TODO(mdan): Is it possible to do it here instead?
-> 3211 return wrapped_fn(*args, **kwargs)
   3212 weak_bound_method_wrapper = weakref.ref(bound_method_wrapper)
   3213

~/anaconda3/envs/SmallVideo/lib/python3.7/site-packages/tensorflow_core/python/framework/func_graph.py in wrapper(*args, **kwargs)
    966 except Exception as e: # pylint:disable=broad-except
    967 if hasattr(e, "ag_error_metadata"):
--> 968 raise e.ag_error_metadata.to_exception(e)
    969 else:
    970 raise

TypeError: in converted code:

    TypeError: tf__call() missing 2 required positional arguments: 'features' and 'hidden'
```

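In other words, the decoder's inputs depend on the encoder's outputs, 'features' and 'hidden'. How can I save the decoder in this case? I've searched through a lot of material and still can't figure it out. Thanks.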
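One workaround, sketched here under assumptions rather than taken from the tutorial: the traceback shows the default save signature calling the model with a single `inputs` value, so `call` never receives `features` and `hidden`. Packing all three inputs into one tuple and fixing them with an input_signature gives SavedModel a single, fully specified input. `ToyDecoder`, its layers, and every size below are hypothetical stand-ins for the tutorial's decoder (there is no real attention here). With this layout, the training loop would call `decoder((dec_input, features, hidden))`. Note that tf.saved_model.save always writes a SavedModel directory, so a ".h5" suffix does not produce an HDF5 file.

```python
import os
import tempfile

import tensorflow as tf

# Hypothetical sizes, for illustration only.
VOCAB, EMBED, UNITS, FEAT_DIM, NUM_LOC = 50, 8, 16, 32, 64


class ToyDecoder(tf.keras.Model):
    """Toy stand-in for the tutorial's decoder; x, features, hidden arrive as one tuple."""

    def __init__(self):
        super().__init__()
        self.embedding = tf.keras.layers.Embedding(VOCAB, EMBED)
        self.fc_state = tf.keras.layers.Dense(UNITS, activation="tanh")
        self.fc_out = tf.keras.layers.Dense(VOCAB)

    @tf.function(input_signature=[(
        tf.TensorSpec([None, 1], tf.int32),                    # x: previous word ids
        tf.TensorSpec([None, NUM_LOC, FEAT_DIM], tf.float32),  # features: encoder output
        tf.TensorSpec([None, UNITS], tf.float32),              # hidden: decoder state
    )])
    def call(self, inputs):
        x, features, hidden = inputs
        context = tf.reduce_mean(features, axis=1)   # crude stand-in for attention
        emb = tf.squeeze(self.embedding(x), axis=1)  # (batch, EMBED)
        state = self.fc_state(tf.concat([emb, context, hidden], axis=-1))
        return self.fc_out(state), state


decoder = ToyDecoder()
batch = 4
sample = (
    tf.zeros([batch, 1], tf.int32),
    tf.zeros([batch, NUM_LOC, FEAT_DIM]),
    tf.zeros([batch, UNITS]),
)
decoder(sample)  # build the layers with one call

# SavedModel writes a directory, so no ".h5" suffix here.
export_dir = os.path.join(tempfile.mkdtemp(), "decoder")
tf.saved_model.save(decoder, export_dir)

loaded = tf.saved_model.load(export_dir)
preds, state = loaded.call(sample)
print(preds.shape, state.shape)
```

If an actual .h5 file is required, that is the Keras HDF5 format written by model.save / save_weights, which is a different mechanism from tf.saved_model.save.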