#!/usr/bin/env python
# -*- coding: UTF-8 -*-
from __future__ import print_function

import numpy as np
from sklearn.model_selection import train_test_split
import tensorflow as tf
# tensorflow.contrib exists only in TensorFlow 1.x; it was removed in 2.0.
from tensorflow.contrib.tensor_forest.python import tensor_forest
from tensorflow.python.ops import resources

def load_data(path):
    """Read a CSV of numeric feature columns followed by the species name."""
    # Map each species name to the integer class id used as the label.
    class_ids = {'Iris-setosa': 0, 'Iris-versicolor': 1, 'Iris-virginica': 2}
    features = []
    labels = []
    with open(path, 'r') as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines, e.g. at the end of the file
            items = line.split(',')
            features.append([float(x) for x in items[:-1]])
            # Labels that are already numeric pass through unchanged.
            label = class_ids.get(items[-1], items[-1])
            labels.append(int(float(label)))
    return np.array(features), np.array(labels, dtype=np.int32)

if __name__ == '__main__':

data, lables = load_data ('iris.csv')

train_data, test_data, train_lables, test_lables = train_test_split (data, lables, test_size=0.3, random_state=33)

# Parameters

num_steps = 1000

num_classes = 3

num_features = 4

num_trees = 10

max_nodes = 100

# Input and Target data

X = tf.placeholder (tf.float32, shape=[None, num_features])

# For random forest, labels must be integers (the class id)

Y = tf.placeholder (tf.int32, shape=[None])

# Random Forest Parameters

hparams = tensor_forest.ForestHParams (num_classes=num_classes,

num_features=num_features,

num_trees=num_trees,

max_nodes=max_nodes).fill ()

# Build the Random Forest

forest_graph = tensor_forest.RandomForestGraphs (hparams)

# Get training graph and loss

train_op = forest_graph.training_graph (X, Y)

loss_op = forest_graph.training_loss (X, Y)

# Measure the accuracy

infer_op = forest_graph.inference_graph (X)

correct_prediction = tf.equal (tf.argmax (infer_op, 1), tf.cast (Y, tf.int64))

accuracy_op = tf.reduce_mean (tf.cast (correct_prediction, tf.float32))

# Initialize the variables (i.e. assign their default value) and forest resources

init_vars = tf.group (tf.global_variables_initializer (),

resources.initialize_resources (resources.shared_resources ()))

# Start TensorFlow session

sess = tf.Session ()

# Run the initializer

sess.run (init_vars)

# Training

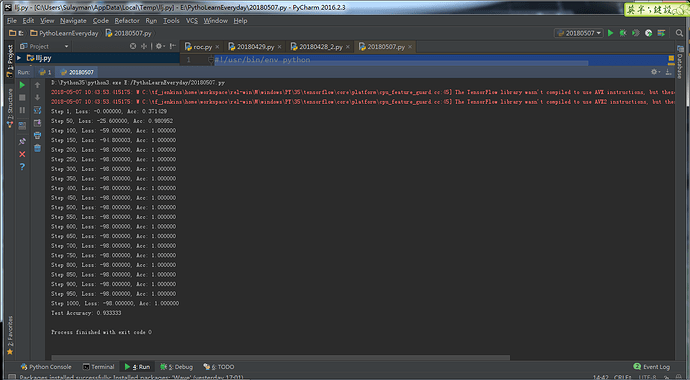

for i in range (1, num_steps + 1):

_, l = sess.run ([train_op, loss_op], feed_dict={X: train_data, Y: train_lables})

if i % 50 == 0 or i == 1:

acc = sess.run (accuracy_op, feed_dict={X: train_data, Y: train_lables})

print ('Step %i, Loss: %f, Acc: %f' % (i, l, acc))

# Test Model

print ("Test Accuracy:", sess.run (accuracy_op, feed_dict={X: test_data, Y: test_lables}))
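The accuracy check in the script (take the argmax over each row of class scores, compare it with the label, then average the matches) can be followed by hand in plain Python. This is only an illustrative sketch: `accuracy_from_scores` and the example values are made up for this note and are not part of the post or of TensorFlow.

```python
def accuracy_from_scores(scores, labels):
    """Return the fraction of rows whose top-scoring class equals the label.

    `scores` is a list of per-class score rows and `labels` a list of
    integer class ids. Both names are hypothetical, chosen for this sketch.
    """
    if len(scores) != len(labels):
        raise ValueError('scores and labels must have the same length')
    if not labels:
        return 0.0
    correct = 0
    for row, label in zip(scores, labels):
        # argmax: index of the largest score in this row (first one on ties)
        predicted = max(range(len(row)), key=row.__getitem__)
        if predicted == label:
            correct += 1
    # Mean of the 0/1 "prediction was correct" flags
    return correct / float(len(labels))


# Made-up scores for four samples and three classes.
example_scores = [[0.8, 0.1, 0.1],
                  [0.2, 0.5, 0.3],
                  [0.1, 0.2, 0.7],
                  [0.6, 0.3, 0.1]]
example_labels = [0, 1, 2, 1]
example_accuracy = accuracy_from_scores(example_scores, example_labels)
print('Accuracy:', example_accuracy)
```

Here the last sample is scored highest for class 0 but labelled 1, so three of the four predictions match.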

Asked by: M 丶 Sulayman, posted: 2018-5-7 10:51:38